- Getting Started

- Creating a Connection

- Configuring Apache Iceberg as a Destination

- Streaming data into Tabular

- Reading metadata with pyiceberg

- Summary

Airbyte is an open-source ELT (extract, load, transform) platform that allows you to sync data from applications, APIs, and databases. These are then immediately loaded into data warehouses, data lakes, and other destinations. The aim with ELT is to mirror datasets across different sources and destinations, before applying transformation logic in downstream steps.

Airbyte added support for Apache Iceberg as a destination in late 2022. Before this connector existed, the only data lake support was an S3 destination that wrote the files like Parquet to S3, but didn’t handle the Iceberg metadata. Recently, REST catalog support was added, which further enables streaming data into Tabular.

Tabular is a cloud-native data platform built on Apache Iceberg and combines the strong SQL guarantees and behavior of a data warehouse with the flexibility to use any processing engine like a data lake.

Getting Started

At the time of this writing, REST catalog support hasn’t been officially released by Airbyte. Still, we can build and deploy Airbyte from the latest master branch as follows:

# clone Airbyte from GitHub

git clone https://github.com/airbytehq/airbyte.git

# switch into Airbyte directory

cd airbyte

# start Airbyte

./run-ab-platform.sh

- In your browser, just visit http://localhost:8000

- By default, username, and password are set to

airbyte/password - Please follow the official Airbyte docs in case of any issues

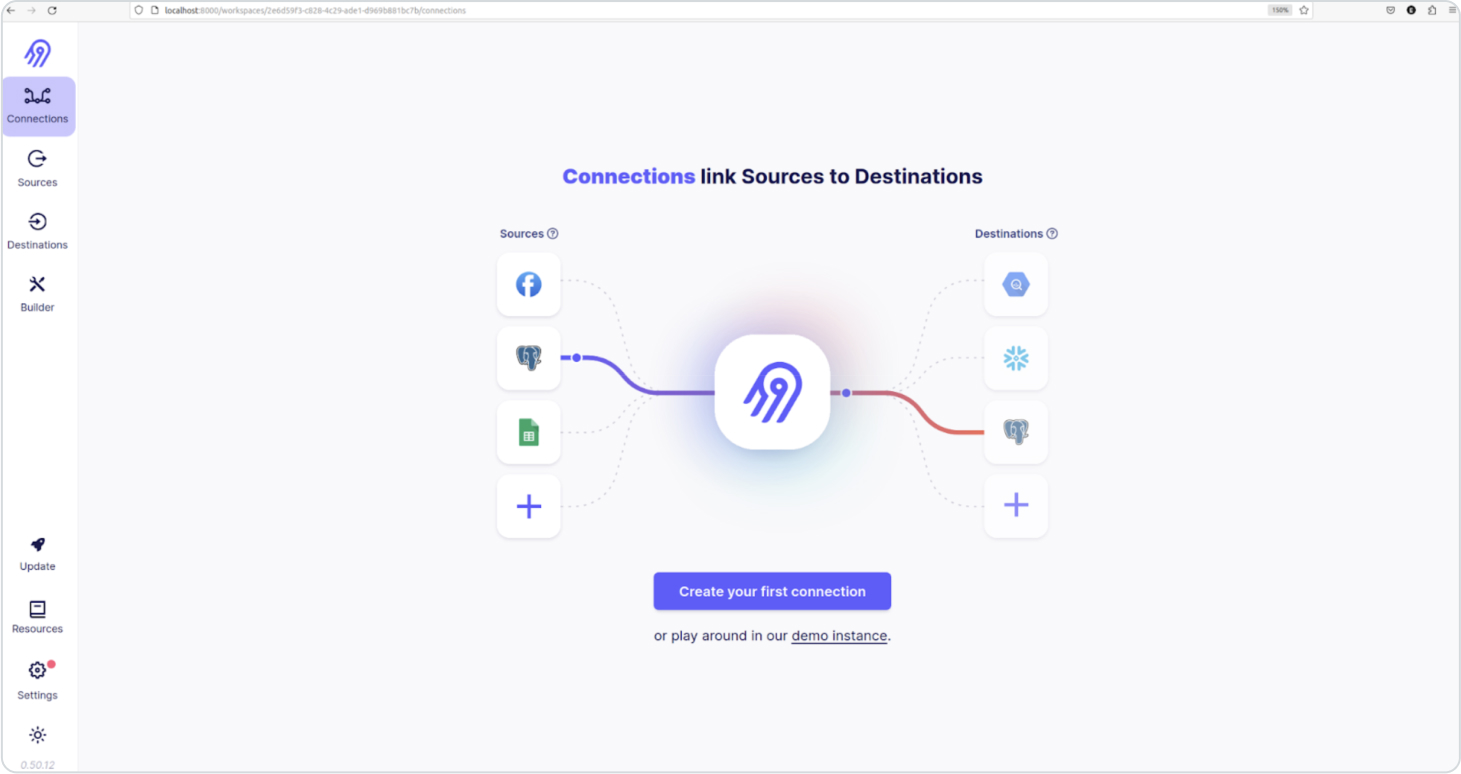

Once the platform is ready, the following screen should be visible:

Creating a Connection

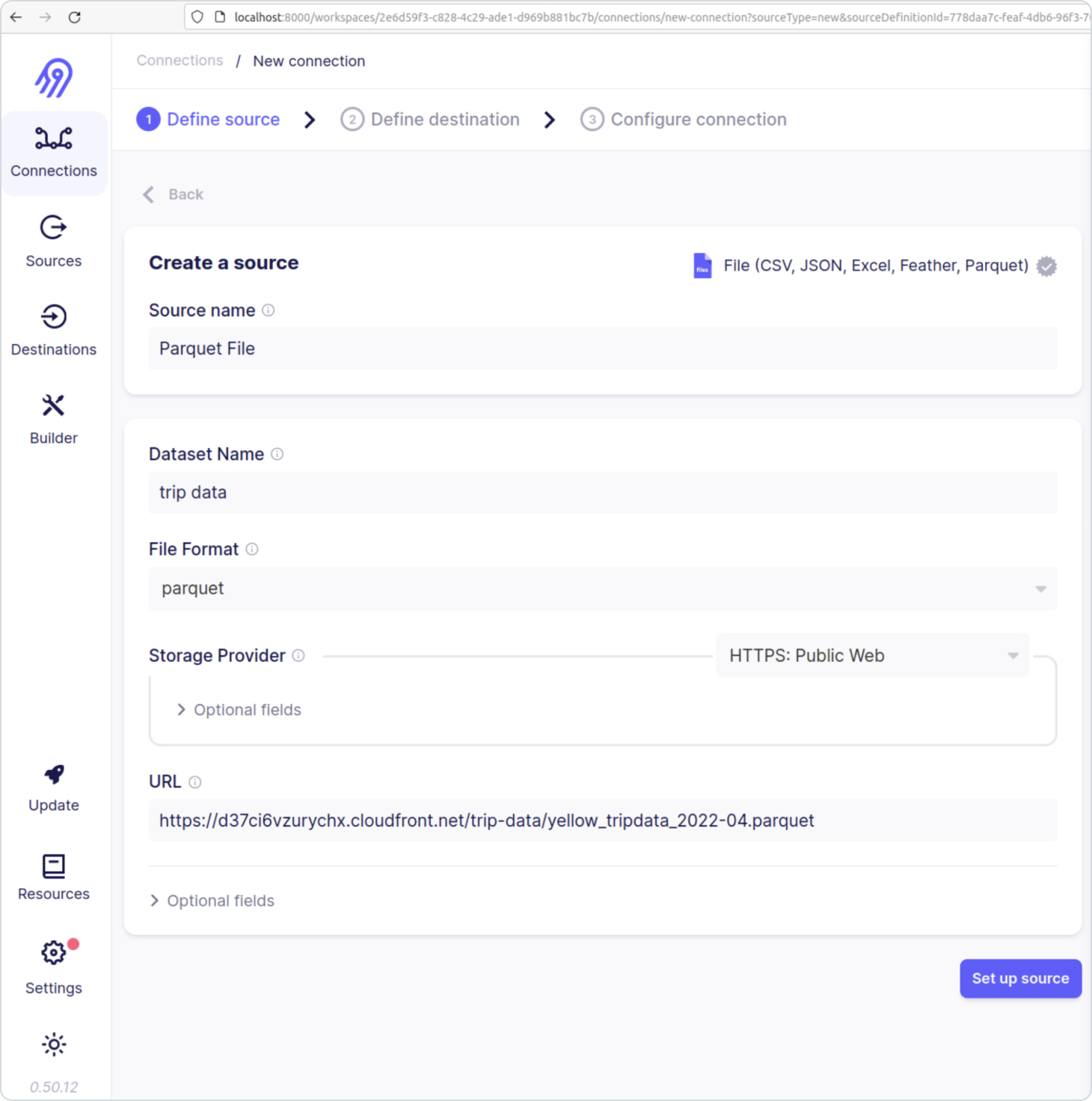

For this demo, we would like to load data from a Parquet file into Apache Iceberg using one of the Parquet files from the TLC Trip records data set.

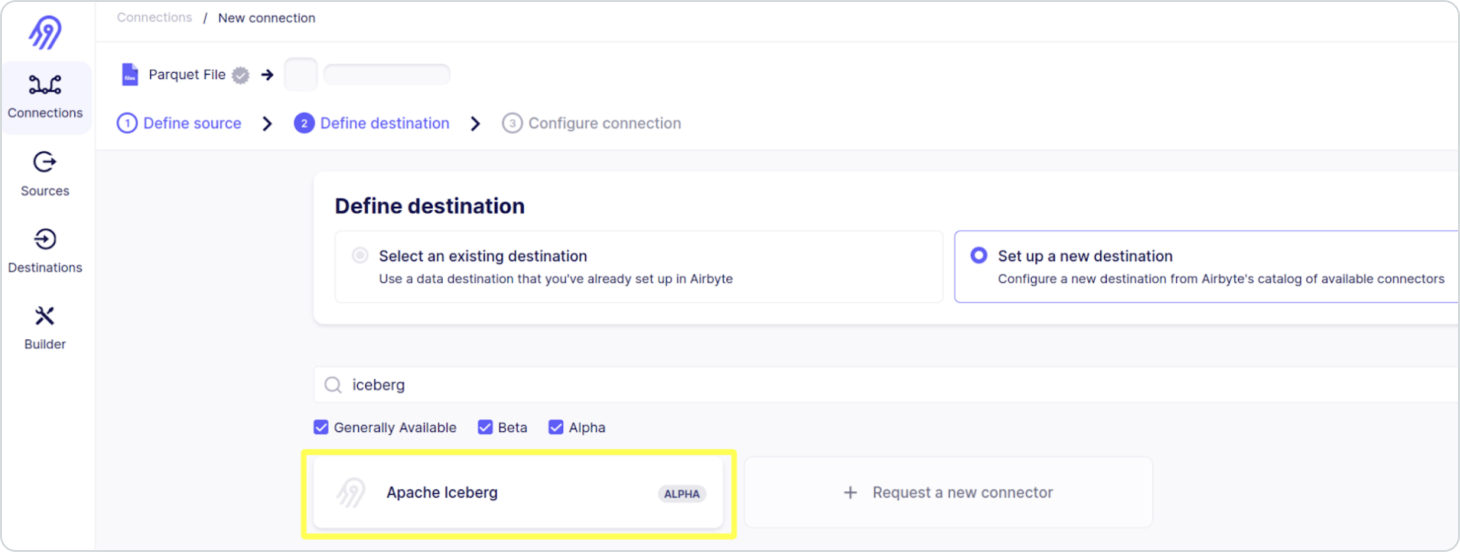

Once the source is configured to use a particular Parquet file, we can select Apache Iceberg as the destination.

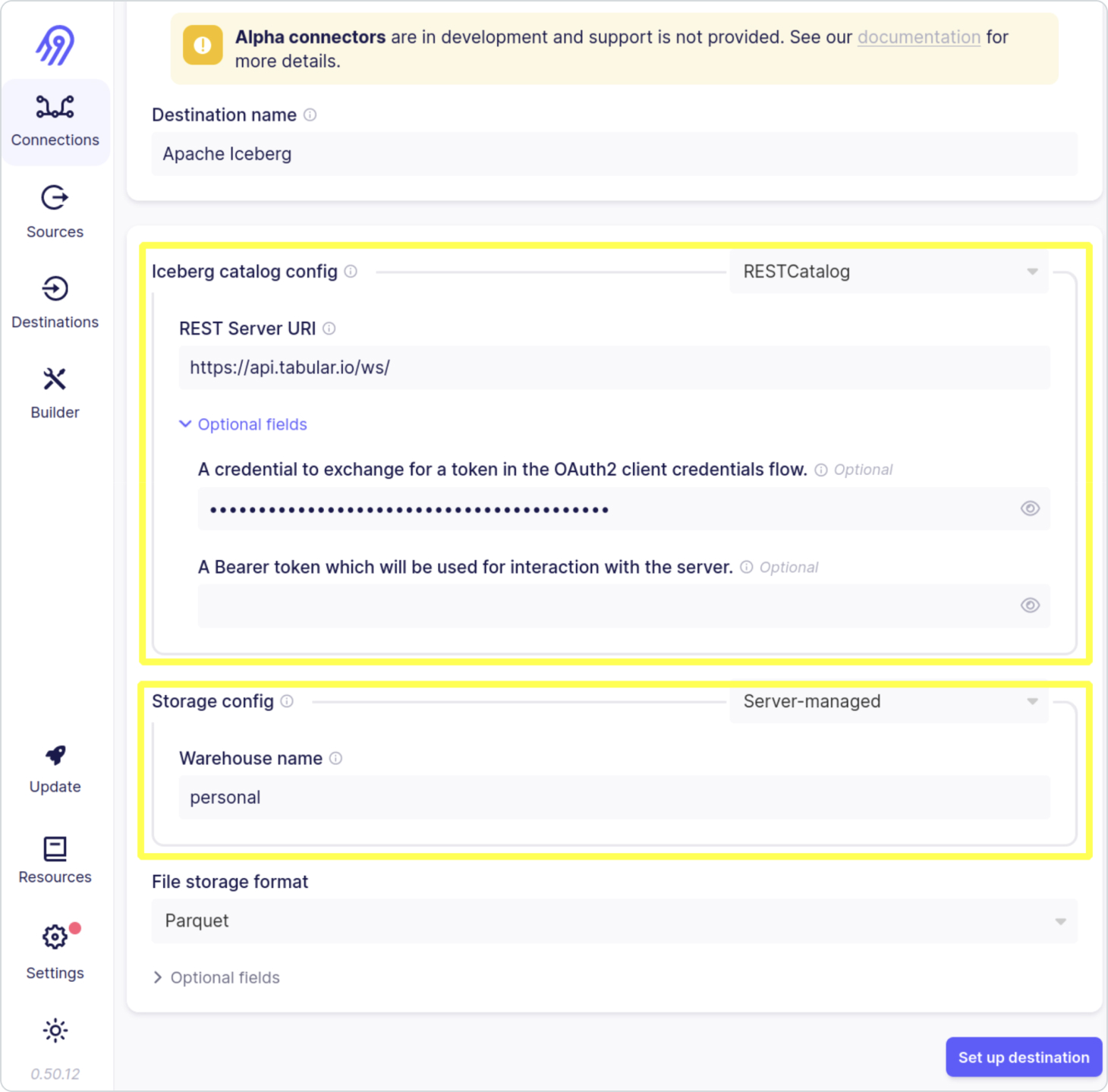

Configuring Apache Iceberg as a Destination

Airbyte currently supports the following Apache Iceberg catalogs:

- HiveCatalog

- HadoopCatalog

- JdbcCatalog

- RESTCatalog

And the following storage options are available:

- S3

- Server-managed

We will select RESTCatalog and server-managed storage for connecting with Tabular, specifying the REST server URI to point to the Tabular platform and a previously created OAuth2 credential.

As indicated in the storage config, we would like to stream data into a Tabular warehouse called personal.

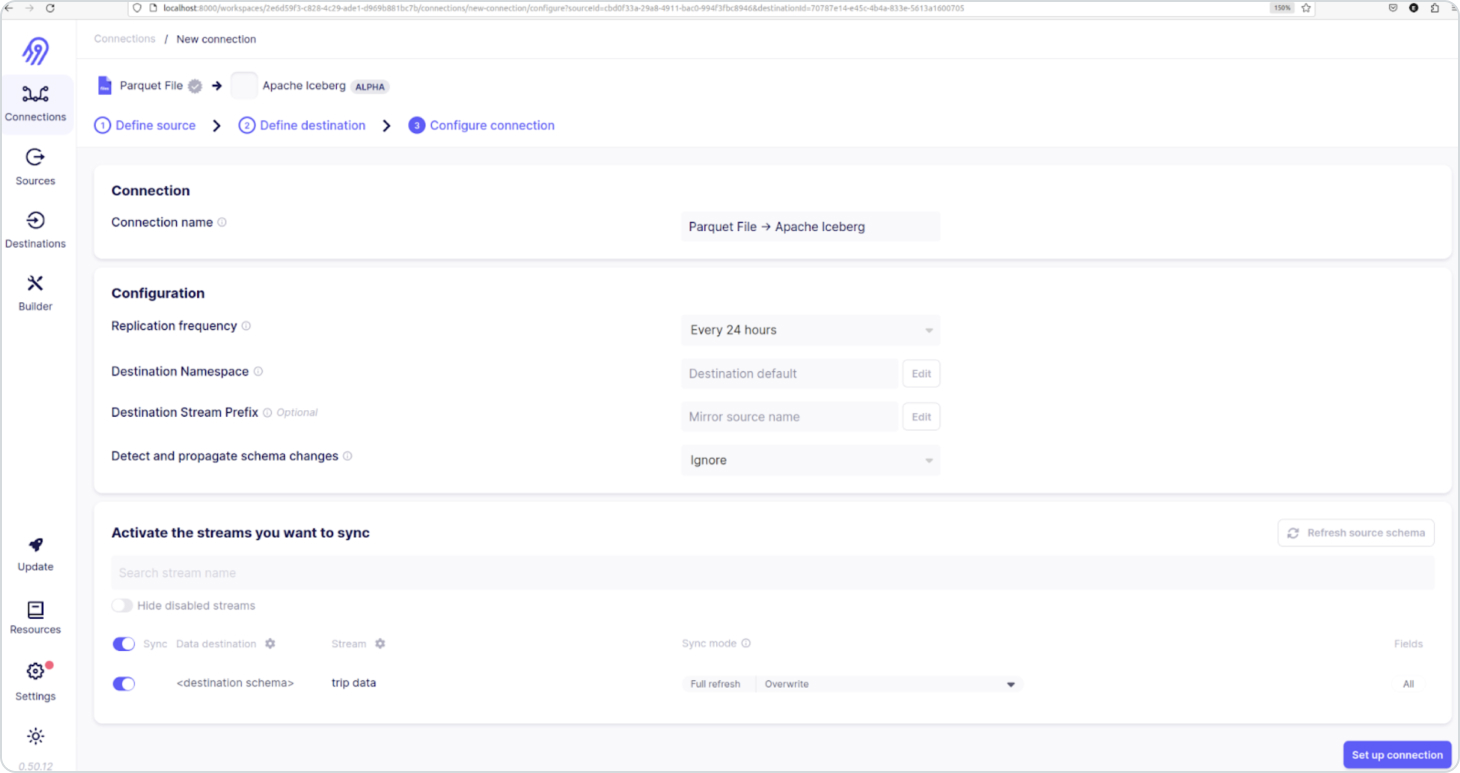

After the destination passes some connection tests, we can define the replication frequency, the destination namespace, and a few other things.

Streaming data into Tabular

Once we hit Set up connection, Airbyte will start streaming data from the Parquet file into Tabular.

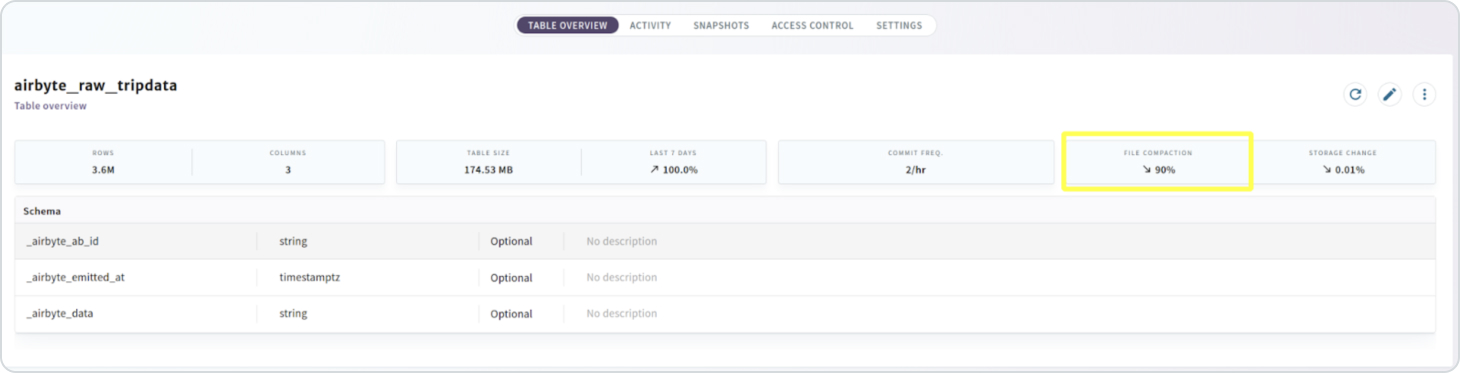

The incoming Airbyte data is structured in keyspaces and tables and is partitioned and replicated across different nodes in the cluster. This connector maps an incoming stream to an Iceberg table and a namespace to an Iceberg database. Fields in the Airbyte message become different columns in the Iceberg tables. Each table will contain the following columns.

_airbyte_ab_id: A randomly generated uuid._airbyte_emitted_at: a timestamp representing when the event was received from the data source._airbyte_data: a JSON text representing the extracted data.

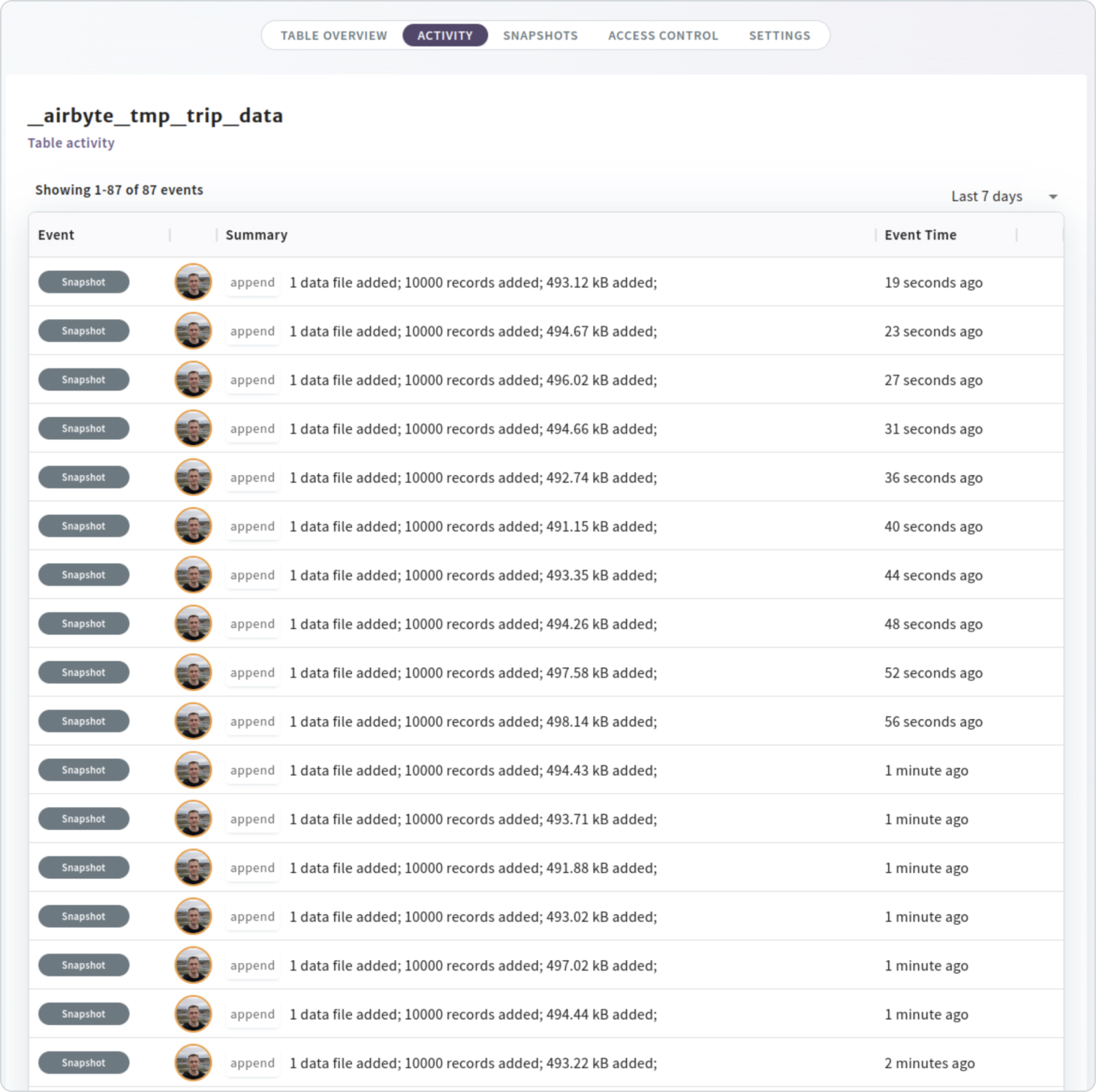

By default, data will be streamed into a table named default._airbyte_tmp_trip_data.

As indicated below, the Activity log of default._airbyte_tmp_trip_data shows 10K records being appended at a time.

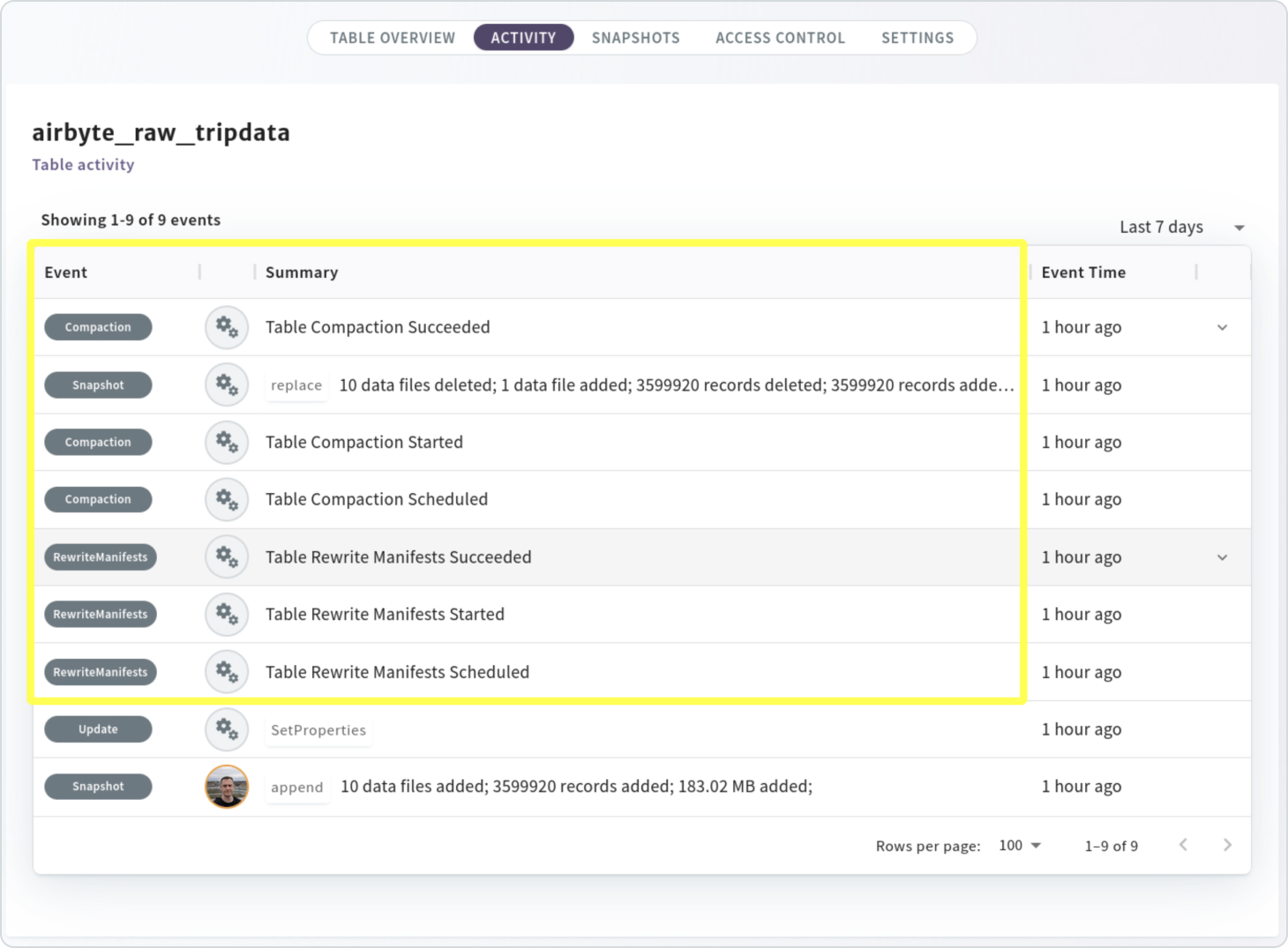

The Activity log also shows that automatic maintenance was running on the table as indicated below:

In this particular case, table compaction was able to reduce files by 90%.

Reading metadata with pyiceberg

Using pyiceberg we can examine the default._airbyte_tmp_trip_data table. In order to do that, we need a minimal configuration to connect to the Tabular platform, which is outlined below:

> cat ~/.pyiceberg.yaml

catalog:

default:

uri: https://api.tabular.io/ws/

credential: <credential used above>

warehouse: personal

Specifying the uri / credential / warehouse and then executing pyiceberg describe default._airbyte_tmp_tripdata allows a quick examination of the table’s metadata:

> pyiceberg describe default._airbyte_tmp_tripdata

No preferred file implementation for schema:

Table format version 1

Metadata location s3://<warehouse-location>/metadata/00251-19c4237f-f026-4d1c-b2d4-987b805ee424.gz.metadata.json

Table UUID 6662653a-ab25-441b-a335-98edb2c6830b

Last Updated 1691134224584

Partition spec []

Sort order []

Current schema Schema, id=0

├── 1: _airbyte_ab_id: optional string

├── 2: _airbyte_emitted_at: optional timestamptz

└── 3: _airbyte_data: optional string

Current snapshot Operation.APPEND: id=6854353204157235444, parent_id=4500555541869681149, schema_id=0

Snapshots Snapshots

├── Snapshot 2976328338228339171, schema 0: s3://<warehouse-location>/metadata/snap-2976328338228339171-1-84da42a0-6bdb-406f-a6e8-27bcc75e143a.avro

├── Snapshot 5928162284375542040, schema 0: s3://<warehouse-location>/metadata/snap-5928162284375542040-1-67ce2b70-3dc9-4416-8018-b2e894699f04.avro

├── Snapshot 2797379876376694124, schema 0: s3://<warehouse-location>/metadata/snap-2797379876376694124-1-7123b023-a8c5-4744-9772-24b37cd80bd5.avro

├── Snapshot 1470468110384586865, schema 0: s3://<warehouse-location>/metadata/snap-147046811038458686-1-7134ad5f-1156-4a6d-8629-24a67b740809.avro

.......many more snapshots......

Properties optimizer.enabled true

manifest-rewrite.submitted 2023-08-04T07:11:30.240761820Z

write.delete.parquet.compression-codec zstd

write.format.default parquet

creator-role-id 90dd06f2-3f3b-4149-a662-6d78a2cc2724

write.metadata.compression-codec gzip

write.parquet.compression-codec zstd

write.object-storage.enabled true

write.summary.partition-limit 100

Summary

We have seen how easy it is to stream data from an Airbyte-supported source via Apache Iceberg into Tabular. This comes with the additional benefit that our data is read and written in a secure manner as Tabular provides a centralized layer of security for your Apache Iceberg tables with regard to how various compute engines access those tables.

Tabular implements that access through the use of credentials (we provided this credential when setting up our destination above and when reading metadata with pyiceberg).

Additionally, automatic maintenance was able to reduce the amount of files by 90%, resulting in better performance when reading and writing and to an overall healthier table.

If you want to learn more about credentials in particular or about how Tabular secures the data lake, please visit one of the blog posts mentioned below:

- What are Tabular credentials?

- Securing the Data Lake – Part I

- Securing the Data Lake – Part II

- Securing the Data Lake – Part III

If you want to learn more about how Tabular keeps tables healthy, please visit: