Overview

- What is Tabular?

- What is Tabular NOT?

- What is Apache Iceberg?

- What’s a table format?

- Who contributes to the Iceberg project?

- What does Tabular add to Iceberg?

- Does Tabular have its own catalog?

- How does the Tabular catalog differ from other catalogs such as the Apache Hive metastore or the AWS Glue catalog?

- Can I use Tabular with the Hive Catalog or the AWS Glue Catalog?

- Iceberg is open source, but am I locked into Tabular?

- How does Tabular improve query performance?

- How does Tabular impact data engineers?

- On what cloud platforms does Tabular run?

- How well does Tabular scale?

- How is Tabular priced?

Getting started

Support and compatibility

- Does Tabular work with my current data tools?

- What file types does Tabular support?

- With which compute/query engines can I use Tabular?

- With which storage services can I use Tabular?

- I’m an AWS customer. What value could Tabular add?

- I’m a Google Cloud Platform (GCP) customer. What value could Tabular add?

- I currently use Snowflake. Why would I also want to use Tabular?

- I currently use both a data lake and a data warehouse. Does Tabular work with both?

- How does Tabular File Loader handle schema?

Security and compliance

- How secure is Tabular’s deployment model?

- My company is in a highly-regulated industry. Does Tabular facilitate compliance with mandates such as HIPAA, SOX, and GDPR?

- How do I keep my data secure in Tabular/Iceberg?

- Will Tabular’s access controls prevent me from getting my job done?

- Is Tabular SOC2 compliant?

Overview

What is Tabular?

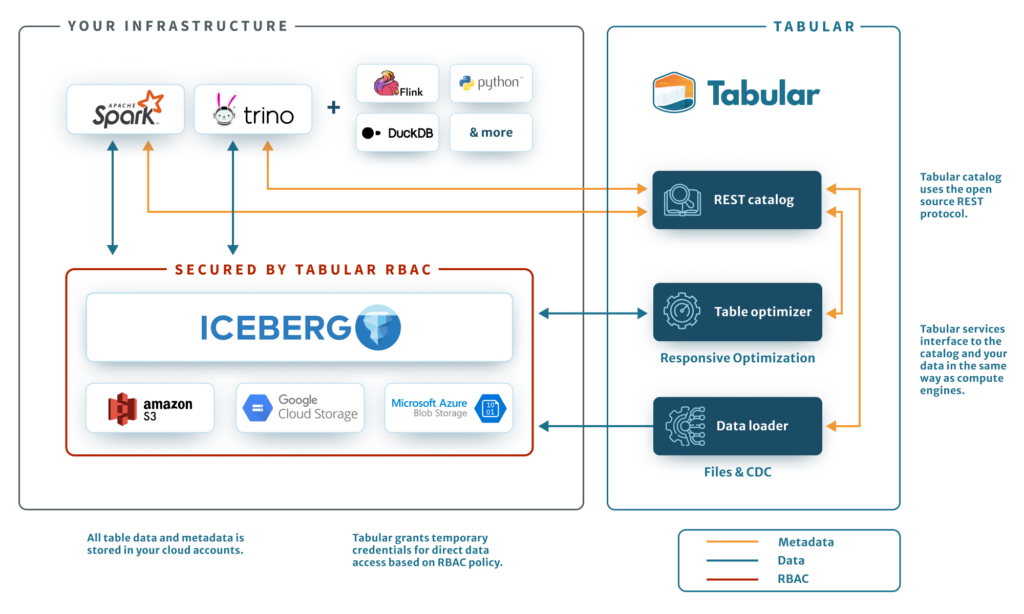

Tabular is a cloud-native managed storage engine that provides a range of services on top of Apache Iceberg tables. Functionally, you can think of it as a “headless data warehouse” to which you can connect one or more compute/query engines. It can provide external tables to a data warehouse such as Snowflake or interact with a data lake query engine such as AWS Athena.

Tabular makes it simple to ingest data into Iceberg tables, whether batch, streaming, or CDC. It optimizes Iceberg storage automatically to maximize efficiency, resulting in reduced data size and accelerated queries. It’s fully open – you can use it with any query engine – and includes a centralized role-based access control security layer.

Tabular provides three key value adds:

Load – Tabular makes it painless to bring data into Iceberg tables from files, CDC events from databases, and streaming events.

Optimize – Tabular intelligently determines the best way to organize data at the file level to speed queries and lower costs.

Secure – Tabular centralizes role-based access control down to the column, unifying security across various compute methods.

What is Tabular NOT?

Tabular is not a vertically integrated data warehouse such as Snowflake, Redshift, Teradata, or Google BigQuery. It does not tightly couple storage with a query engine; instead, it lets you connect multiple third-party query engines to a common, secured storage layer.

Also, while Tabular provides a data catalog and metadata management, it is neither a federated data catalog nor a metadata management platform.

What is Apache Iceberg?

Apache Iceberg is a high-performance open-source format for analytic tables. Developed at Netflix and Apple and donated to the ASF, Iceberg was designed and built to make data practitioners more productive while solving the need for reliable transactions, query performance, and scalability up to tens or hundreds of petabytes.

Iceberg uses metadata to make data files contained in a table available for analysis and other processing. Metadata tells you what files are in a table and reveals the table’s schema and partition strategy. This in turn enables compute engines (such as AWS Athena, Trino, and Spark) to find and process only the files required to serve a particular query, eliminating inefficient and costly full data scans.
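As a rough illustration of how metadata lets an engine skip files, the sketch below keeps minimum and maximum column values per data file and selects only the files whose range could match a date filter. The `DataFile` class, the file paths, and the `plan_scan` function are hypothetical names invented for this example; they are not Iceberg's API, and real Iceberg manifests carry much richer statistics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    """Hypothetical stand-in for one manifest entry: a path plus column bounds."""
    path: str
    min_day: str  # lowest event_date value in the file (ISO format)
    max_day: str  # highest event_date value in the file (ISO format)


def plan_scan(files, start_day, end_day):
    """Return only the files whose [min_day, max_day] range overlaps the filter."""
    return [f for f in files if f.max_day >= start_day and f.min_day <= end_day]


manifest = [
    DataFile("data/a.parquet", "2024-01-01", "2024-01-31"),
    DataFile("data/b.parquet", "2024-02-01", "2024-02-29"),
    DataFile("data/c.parquet", "2024-03-01", "2024-03-31"),
]

# A query for mid-February only needs to open one of the three files.
selected = plan_scan(manifest, "2024-02-10", "2024-02-20")
print([f.path for f in selected])
```

Because the decision is made from metadata alone, the two files outside the date range are never read.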

Benefits of the Apache Iceberg table format

What’s a table format?

A file format such as Parquet organizes the data it contains. A table format organizes data files so they can be stored and queried efficiently with consistent results. It’s much easier to analyze a discrete dataset than it is to group or analyze individual files.

Table formats bring database-like features to object storage in data lakes. A table format includes support for:

- ACID transactions (transactional guarantees).

- Schema evolution.

- Time travel.

- Performance enhancements.

Who contributes to the Iceberg project?

Iceberg gets contributions from a range of end user companies as well as unaffiliated data engineers. Iceberg is governed as an Apache project, and as of Q1 2024, the project had 458 contributors, 5200 merged PRs, and 602 open PRs.

Companies that have contributed to Iceberg along with Tabular include Netflix, Salesforce, Apple, LinkedIn, Amazon, Stripe, Alibaba, Lyft, and Cloudera.

What does Tabular add to Iceberg?

Tabular augments Iceberg tables with a range of automated data- and storage-related services that together significantly speed queries, sharply reduce storage cost, and save substantial engineering time. Tabular helps you:

- Load – includes built-in ingestion services for files, CDC, and (coming soon) event streaming.

- Optimize – automatically handles most of the tedious data engineering, including per-table optimization settings and scheduling (e.g., compaction, compression, and clustering).

- Secure – provides centralized role-based access controls.

Visit our Product page for more detailed information.

Does Tabular have its own catalog?

Yes. Tabular created its catalog specifically for Iceberg tables. It supports many Iceberg features that enable data warehousing and add SQL database features to a data lake. The Tabular catalog implements the Iceberg REST specification that unlocks advanced database features such as commit deconfliction, safe version upgrades, and fast metadata access.

How does the Tabular catalog differ from other catalogs such as the Apache Hive metastore or the AWS Glue catalog?

The Tabular and Glue catalogs are built for different purposes.

Glue is a generic catalog and can track various formats, including Iceberg and Hive tables. However, it does not deliver database-like features. Tabular has deep integration with Apache Iceberg to enable features like ACID transactions, data/metadata maintenance, lifecycle policy, optimization, multi-table transactions and RBAC.

Can I use Tabular with the Hive Catalog or the AWS Glue Catalog?

Tabular is its own catalog that implements the Iceberg REST specification, and it is separate from other catalogs. You can use it alongside them. Tabular also makes it easy to migrate your existing tables from those catalogs to Tabular, which gives you access to the security, optimization, and other performance-enhancing features of Iceberg.

Iceberg is open source, but am I locked into Tabular?

No, your data is formatted per the Iceberg specification and is completely portable.

Tabular and query engines all interact with your data through the open-source Iceberg format and library. This includes any engine that supports the Iceberg REST catalog. Tabular doesn’t build proprietary Iceberg extensions; we think it’s important that organizations continue to be able to use the open-source project with any table and build it into any query engine.

Read more about Tabular and the Iceberg Community.

How does Tabular improve query performance?

Tabular applies all of the capabilities enumerated in the full Apache Iceberg specification to create and manage Iceberg tables in the most efficient way possible. This includes:

- Schemas and data

- Types

- Partitioning

- Sorting

- Manifests

- Snapshots

- Table metadata

- Delete formats

By way of illustration, Tabular automatically handles all storage-related Iceberg maintenance and optimization such as compacting small files and removing expired table snapshots. The Tabular table optimizer runs automatically on tables in a Tabular warehouse and optimizes the data layout based on various factors, including partitioning scheme, sort order, and compression codec. It continues to tune your tables as data changes or new query patterns emerge. Automatic optimization reduces data volume, consequently speeding up queries, as data volume is directly related to query performance.
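To make the idea of compacting small files concrete, here is a minimal sketch that greedily packs small files into groups no larger than a target size. It is an assumption-laden illustration, not Tabular's optimizer: the `plan_compaction` function, the 512 MB target, and the example sizes are all hypothetical.

```python
def plan_compaction(file_sizes_mb, target_mb=512):
    """Greedily group small files so each group totals at most target_mb.

    Files already at or above the target are left alone.
    """
    groups = []
    current, current_size = [], 0
    small_files = sorted((s for s in file_sizes_mb if s < target_mb), reverse=True)
    for size in small_files:
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        groups.append(current)
    # A group containing a single file gains nothing from being rewritten.
    return [g for g in groups if len(g) > 1]


sizes = [600, 300, 200, 100, 50, 20, 10]
plan = plan_compaction(sizes, target_mb=512)
print(plan)
```

In this example the six small files collapse into two rewritten files, so a query plans over three files instead of seven.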

How does Tabular impact data engineers?

Typically, data engineers spend significant time and energy getting the details of job planning and execution just right. For example:

- the right size file for the job

- the partitioning strategy

- the sorting strategy

- more

Tabular removes this resource-intensive burden from the engineer. It eliminates the need to manually and continually tune compute workloads to optimize storage: Tabular plans and then executes the optimal job automatically. It continuously optimizes tables, manages infrastructure systems, handles schema changes, and so on.

This frees up data engineers to focus on higher-value tasks.

On what cloud platforms does Tabular run?

Tabular is a cloud-native service available as a SaaS offering. It is generally available on AWS and is in private preview for Google Cloud Platform and Azure. Cloud object storage is hosted in the customer’s account. Support for MinIO storage is planned.

How well does Tabular scale?

In terms of scale, Iceberg was built to handle environments where a single table could contain tens of petabytes. But it is flexible enough to be valuable to businesses of all sizes.

How is Tabular priced?

Tabular is a pay-as-you-go (PAYG) service with no up-front or recurring flat fees. Tabular credits are based on data volume.

This consumption model gives you cost predictability; you know how much you’ll be charged because you know how much data you want to process. Note that the amount of data you have stored is irrelevant; you get charged only for processing, and all processing is optional on a per-table basis.

Tabular’s pricing plans include a full-featured free tier, so you can try out Tabular yourself, in a sandbox or with your production data, at no cost.

Here are more details on our price plans.

Getting started

Do I need to be an Iceberg expert before using Tabular?

Not at all. Tabular’s goal is to make Iceberg invisible so you feel like you’re working with a traditional data warehouse. On a day-to-day basis you can stop thinking about Iceberg altogether.

Are there prerequisites to using Tabular?

Besides having data in a cloud account, no. You can just sign up, create an account, and try it out right away.

What’s the learning curve like?

Brief to non-existent. Our goal is to make Iceberg invisible. You don’t need to learn anything; Tabular tables just work as you’d expect. There’s no need to ask questions such as, “Can I safely rename columns in this table?” These problems disappear, so you can focus on work – not configuring and debugging pipelines.

So how does Tabular actually work?

You can do everything you need via the Tabular UI. After signing up, you create an account and establish an organization. Then:

- Create a warehouse

- Create a database

- Invite members

- Create one or more member roles

- Apply role-based access to your data at the table level

You can accomplish all of this within minutes, and start working with your data as you normally would, with whichever tools you’re already using. For specific steps, visit our YouTube Channel.

What are Tabular’s data ingestion capabilities?

Tabular can ingest events or files at scale in streaming, batch, and CDC modes. You create databases and Iceberg tables directly from the Tabular UI, and configure Tabular to continually ingest new data and populate your tables.

You can also easily kick off ad hoc data loads and mirror relational databases using CDC.

Tabular also works with AWS Kinesis and Apache Flink to deliver data to your warehouse with exactly-once semantics.

In the future, Tabular will offer a managed service for ingesting stream events.

Can I perform change data capture (CDC) with Tabular?

Yes. When you send CDC data into Iceberg, Tabular automatically materializes a merge table that is a mirror of the source. It automates hundreds of ETL jobs, handles all of the orchestration, and performs the CDC merge.

Tabular and Iceberg facilitate a number of patterns and techniques that help you build and expand business cases around CDC. Examples include CDC table mirroring using a change log pattern, ensuring transactional consistency in mirrored tables, and using merge patterns.
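The change log pattern can be sketched as follows: ordered change events are applied to a mirror table keyed by primary key, so the mirror ends up matching the source. The event shape (`op`, `key`, `row`) and the `apply_changes` function are hypothetical and only illustrate the idea; they do not describe how Tabular implements its CDC merge.

```python
def apply_changes(mirror, changes):
    """Apply ordered CDC events to a mirror table held as {primary_key: row}.

    Each event is a hypothetical dict with "op" ("I", "U" or "D"), "key",
    and, for inserts and updates, the full "row" image.
    """
    result = dict(mirror)  # leave the caller's copy untouched
    for event in changes:
        if event["op"] in ("I", "U"):
            result[event["key"]] = event["row"]  # upsert the latest row image
        elif event["op"] == "D":
            result.pop(event["key"], None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {event['op']!r}")
    return result


mirror = {1: {"name": "Ada", "plan": "free"}}
changelog = [
    {"op": "U", "key": 1, "row": {"name": "Ada", "plan": "pro"}},
    {"op": "I", "key": 2, "row": {"name": "Lin", "plan": "free"}},
    {"op": "D", "key": 2},
    {"op": "I", "key": 3, "row": {"name": "Sam", "plan": "free"}},
]

merged = apply_changes(mirror, changelog)
print(merged)
```

Applying the events strictly in order is what keeps the mirror consistent: row 2 is inserted and then deleted, so it never appears in the final table.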

Support and compatibility

Does Tabular work with my current data tools?

Yes. You can use Tabular and Hive-like tables side-by-side within your existing infrastructure. Tabular tables work seamlessly with your existing data catalog.

What file types does Tabular support?

Tabular supports Apache Parquet, ORC, and Apache Avro. You can also ingest .csv, .tsv, and JSON files. For best results we recommend using Parquet.

With which compute/query engines can I use Tabular?

Because Tabular is based on Iceberg, you can use it with nearly any compute engine, including Snowflake, a range of AWS services, Spark, Trino, Hive, and DuckDB. For the complete list, see our Product page.

Tabular also provides a central security model that applies your access control policies consistently across all engines.

With which storage services can I use Tabular?

Tabular currently supports Amazon S3, with GCS support in preview.

I’m an AWS customer. What value could Tabular add?

Tabular bridges the gap between AWS compute services. It creates a unified data architecture in which Tabular connects seamlessly with any AWS engine or framework. Catalog, maintenance, and optimization are integrated and require no setup. Comprehensive security is achieved via built-in RBAC controls that apply everywhere, integrated with AWS IAM identities.

AWS customers are using Tabular today for enhancements such as:

- Securing their data lake

- Reducing their data footprint by 50% via automatic data loading and optimization

- Orchestrating CDC pipelines

I’m a Google Cloud Platform (GCP) customer. What value could Tabular add?

The Tabular GCP implementation is currently in preview. Please email info@tabular.io if you would like to participate or be informed of general availability.

I currently use Snowflake. Why would I also want to use Tabular?

Tabular’s automated optimization services ensure Snowflake can take full advantage of Iceberg’s native indexing capabilities. This notably improves query cost and performance. You also get shared governance.

When you configure the integration with Snowflake, Tabular mirrors all the Iceberg databases and tables to the target Snowflake account. Tabular automatically creates and updates these external Iceberg tables in Snowflake. In this way Snowflake is always accessing the most recent data in the table without the need for any additional pipelines.

Setting up the integration is simple and secure, and also enables you to use popular data lake engines such as Spark, Trino, and Apache Flink.

Here are more details on how Tabular works with Snowflake.

Reasons why to use Iceberg with Snowflake.

Note: This is a one-way replication; mirrored tables are considered read-only.

I currently use both a data lake and a data warehouse. Does Tabular work with both?

Yes. Tabular is an independent, secure table store that unifies the benefits of data warehouses (performant queries) and data lakes (efficient scale). Use a single copy of your data and one set of access controls everywhere. Tabular supports queries from data warehouses like Snowflake (and soon Redshift and BigQuery) alongside data lake query engines such as Spark, Flink, Trino, Athena, and Starburst. Using Apache Iceberg, you can retain both the flexibility of the data lake and the reliable and efficient SQL typical of a data warehouse such as Snowflake.

How does Tabular File Loader handle schema?

The File Loader can be configured to automatically evolve the table schema to match the schema found in the incoming data files.
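One way to picture automatic schema evolution is as an additive merge: columns that appear in an incoming file but not in the table are appended, and existing columns are left in place. The sketch below assumes that behavior purely for illustration. The `evolve_schema` function, the dict-based schemas, and the choice to reject type conflicts are hypothetical and are not taken from the File Loader's documentation.

```python
def evolve_schema(table_schema, file_schema):
    """Return the table schema extended with any new columns found in a file.

    Schemas are plain {column_name: type_name} dicts. Existing columns keep
    their position; new columns are appended in file order.
    """
    evolved = dict(table_schema)
    for column, type_name in file_schema.items():
        if column not in evolved:
            evolved[column] = type_name
        elif evolved[column] != type_name:
            # Assumption for this sketch: conflicting types are rejected.
            raise TypeError(
                f"column {column!r}: table has {evolved[column]}, file has {type_name}"
            )
    return evolved


table = {"id": "long", "email": "string"}
incoming = {"id": "long", "email": "string", "signup_date": "date"}

evolved = evolve_schema(table, incoming)
print(evolved)
```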

Security and compliance

How secure is Tabular’s deployment model?

Tabular is a SaaS service. Data is read from your cloud account, processed in Tabular’s account, and written back to your cloud.

The deployment model is:

- Isolated – Tabular runs in a separate AWS account

- Auditable – all data accesses are logged and available for inspection

- Fast – sets up in minutes

During setup, you create an IAM role (service account in GCP) that Tabular uses to delegate read/write access to your data and enforce your access control policy. All accesses (and attempts) are logged and shared with you so you can see exactly what is happening and validate against storage access logs.

My company is in a highly-regulated industry. Does Tabular facilitate compliance with mandates such as HIPAA, SOX, and GDPR?

In short – yes. In regulatory parlance, Tabular processes data; it doesn’t control data. You manage the lifespan of the data that lives in your warehouses. For example, you can configure whether to delete rows or mask columns when they pass an age threshold you specify. And you can configure the default maximum length of time you wish to keep expired snapshots or snapshot references.

Similarly, you can audit a table’s authorizations to verify that the people approved to grant write access to the table are distinct from people who possess write access to the table.

Tabular currently holds the following certifications:

- HIPAA

- SOC 2 Type 2

- PCI DSS

- STAR Level 1

Contact Tabular at privacy@tabular.io for help with any questions about your specific regulatory position.

How do I keep my data secure in Tabular/Iceberg?

Tabular uniquely uses a role-based access control (RBAC) model that operates at the storage layer across the various query engines you may use to access the data. You can set policies that apply at the warehouse, database, table, or column level. We believe this is a superior access control model since it eliminates the complex, error-prone nature of managing access via multiple query engines.

In this model, access to securable resources is allowed via privileges assigned to roles, which are in turn assigned to other roles (cascading rights) or users. This model is both different from and more secure than a user-based access control model, in which rights and privileges are assigned to each user or group of users. It is also more secure than assigning rights for each query engine in use, especially as some popular compute layers, such as Spark, do not offer native controls.
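The cascading model described above can be sketched as a walk over role grants: a user's effective privileges are the union of the privileges on every role reachable from the roles assigned to that user. All names in this example (`effective_privileges`, the roles, the users, and the `sales.orders` table) are hypothetical; the sketch illustrates the general RBAC idea and is not Tabular's implementation.

```python
def effective_privileges(user, user_roles, role_roles, role_privileges):
    """Collect every privilege a user holds through direct or inherited roles.

    user_roles:      {user: [roles assigned to the user]}
    role_roles:      {role: [roles assigned to that role]}
    role_privileges: {role: {(resource, privilege), ...}}
    """
    privileges = set()
    seen = set()
    stack = list(user_roles.get(user, []))
    while stack:
        role = stack.pop()
        if role in seen:
            continue  # guards against cycles in role grants
        seen.add(role)
        privileges |= role_privileges.get(role, set())
        stack.extend(role_roles.get(role, []))
    return privileges


role_privileges = {
    "reader": {("sales.orders", "SELECT")},
    "writer": {("sales.orders", "UPDATE")},
}
role_roles = {"analyst": ["reader"], "engineer": ["analyst", "writer"]}
user_roles = {"dana": ["engineer"], "eli": ["analyst"]}

dana = effective_privileges("dana", user_roles, role_roles, role_privileges)
eli = effective_privileges("eli", user_roles, role_roles, role_privileges)
print(sorted(dana))
print(sorted(eli))
```

Here nothing is granted to a user directly: changing what the `reader` role can do changes access for everyone who inherits it, whichever query engine they use.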

For more information, see our blog series on securing the data lake:

- Securing the Storage Layer

- Principle of Least Privilege

- Role-based Access Controls for securing data in a data lake

Will Tabular’s access controls prevent me from getting my job done?

No. Tabular makes it easy to request access to the tables you need and get the proper access from the start. Use any engine or framework and be confident your data is protected without locking down every engine separately. Access is managed using OAuth2 and OpenID Connect so your data is safe but easy to connect to when you need it.