In a previous post, we covered how to use Docker to get up and running quickly with Iceberg and its feature-rich Spark integration. In that post, we selected the Hadoop file-io implementation, mainly because it supports reading from and writing to local files (check out this post to learn more about the FileIO interface). In this blog post, we’ll take one step towards a more typical, modern, cloud-based architecture and switch to Iceberg’s S3 file-io implementation, backed by a MinIO instance, which supports the S3 API.

If you’re not familiar with MinIO, it’s a flexible and performant, Kubernetes-native object store that is compatible with the S3 API. To learn more about it, you can head over to their site at min.io!

Adding the MinIO Container

The easiest way to get a MinIO instance is using the official minio/minio image. Here’s what your docker compose file should look like after following the steps in the Docker, Spark, and Iceberg: The Fastest Way to Try Iceberg! post.

version: "3"

services:
  spark-iceberg:
    image: tabulario/spark-iceberg
    depends_on:
      - postgres
    container_name: spark-iceberg
    environment:
      - SPARK_HOME=/opt/spark
      - PYSPARK_PYTHON=/usr/bin/python3.9
      - PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/spark/bin
    volumes:
      - ./warehouse:/home/iceberg/warehouse
      - ./notebooks:/home/iceberg/notebooks/notebooks
    ports:
      - 8888:8888
      - 8080:8080
      - 18080:18080
  postgres:
    image: postgres:13.4-bullseye
    container_name: postgres
    environment:
      - POSTGRES_USER=admin
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=demo_catalog
    volumes:
      - ./postgres/data:/var/lib/postgresql/data

Add the following to include a container with the official minio/minio image.

...
  minio:
    image: minio/minio
    container_name: minio
    environment:
      - MINIO_ROOT_USER=admin
      - MINIO_ROOT_PASSWORD=password
    ports:
      - 9001:9001  # web console (see --console-address below)
      - 9000:9000  # S3 API
    command: ["server", "/data", "--console-address", ":9001"]
...

Using environment variables, this sets the MinIO root username and password to “admin” and “password”, respectively.

Creating a Bucket on Startup

Next, we’ll want to use the MinIO CLI to bootstrap our MinIO instance with a bucket. MinIO also offers an official image for the CLI, minio/mc.

By defining a simple entrypoint, we’ll also configure the CLI to connect to the MinIO instance and create a bucket which we’ll call ‘warehouse’.

...
  mc:
    depends_on:
      - minio
    image: minio/mc
    container_name: mc
    environment:
      - AWS_ACCESS_KEY_ID=demo
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    # Retry until MinIO accepts the connection, then set up the public warehouse bucket.
    entrypoint: >
      /bin/sh -c "
      until (/usr/bin/mc config host add minio http://minio:9000 admin password) do echo '...waiting...' && sleep 1; done;
      /usr/bin/mc rm -r --force minio/warehouse;
      /usr/bin/mc mb minio/warehouse;
      /usr/bin/mc policy set public minio/warehouse;
      exit 0;
      "
...

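The commands in the entrypoint are separated by semicolons and the script ends with exit 0, so if the bucket already exists and creating it fails, the CLI container still exits gracefully. Note that the rm step runs on every startup, so anything previously stored under minio/warehouse is cleared.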
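The until loop in the entrypoint above is a plain retry-until-ready pattern: keep trying to reach MinIO, print a message, sleep, and try again. As a rough sketch of the same idea from the host machine, the Python below polls MinIO's liveness endpoint using only the standard library. The function names, the 30-attempt limit, and the use of http://localhost:9000 (the port published in the compose file) are assumptions made for illustration, not part of the original setup.

```python
import time
import urllib.request


def minio_is_live(base_url="http://localhost:9000", timeout=2.0):
    """Return True if MinIO's liveness endpoint answers with HTTP 200."""
    try:
        url = f"{base_url}/minio/health/live"
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Connection refused, timeouts, and HTTP errors all derive from OSError.
        return False


def wait_until(probe, attempts=30, delay=1.0, sleep=time.sleep):
    """Call probe() until it returns True and report which attempt succeeded.

    Raises TimeoutError if the service is still not ready after `attempts` tries.
    """
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt
        print("...waiting...")
        sleep(delay)
    raise TimeoutError(f"service not ready after {attempts} attempts")
```

Calling wait_until(minio_is_live) after starting the containers blocks until MinIO responds. Unlike the shell loop, this version gives up after a bounded number of attempts rather than retrying forever.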

Configuring S3FileIO

The file-io for a catalog can be set and configured through Spark properties. We’ll need to change three properties on the demo catalog to use the S3FileIO implementation and connect it to our MinIO container.

spark.sql.catalog.demo.io-impl=org.apache.iceberg.aws.s3.S3FileIO

spark.sql.catalog.demo.warehouse=s3://warehouse

spark.sql.catalog.demo.s3.endpoint=http://minio:9000
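All three properties share the prefix spark.sql.catalog.demo, where demo is the catalog name. To make that pattern explicit, here is a small Python sketch that builds the same three settings for a given catalog name, bucket, and endpoint, and renders them either as spark-defaults.conf lines or as --conf arguments. The helper names are hypothetical and the default values simply mirror the listing above.

```python
def s3_fileio_properties(catalog="demo", bucket="warehouse",
                         endpoint="http://minio:9000"):
    """Return the three catalog properties that point a Spark catalog at S3FileIO."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        f"{prefix}.io-impl": "org.apache.iceberg.aws.s3.S3FileIO",
        f"{prefix}.warehouse": f"s3://{bucket}",
        f"{prefix}.s3.endpoint": endpoint,
    }


def as_spark_defaults(props):
    """Render properties as spark-defaults.conf lines: key, whitespace, value."""
    width = max(len(key) for key in props)
    return "\n".join(f"{key.ljust(width)}  {value}" for key, value in props.items())


def as_conf_flags(props):
    """Render the same properties as a list of --conf key=value arguments."""
    flags = []
    for key, value in props.items():
        flags.extend(["--conf", f"{key}={value}"])
    return flags
```

For example, print(as_spark_defaults(s3_fileio_properties())) prints one line per property in the whitespace-separated form used by spark-defaults.conf.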

We can append these property changes to the spark-defaults.conf in the tabulario/spark-iceberg image by overriding the entrypoint for our spark-iceberg container. Additionally, we’ll need to set the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_REGION environment variables so that S3FileIO can authenticate against MinIO; the access key and secret key are the MinIO root username and password from above. The region must be set, but the value doesn’t matter since we’re running locally, so we’ll just use us-east-1.

  spark-iceberg:
    image: tabulario/spark-iceberg
    depends_on:
      - postgres
    container_name: spark-iceberg
    environment:
      - SPARK_HOME=/opt/spark
      - PYSPARK_PYTHON=/usr/bin/python3.9
      - PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/spark/bin:/opt/spark/sbin
      - AWS_ACCESS_KEY_ID=admin
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    volumes:
      - ./warehouse:/home/iceberg/warehouse
      - ./notebooks:/home/iceberg/notebooks/notebooks
    ports:
      - 8888:8888
      - 8080:8080
      - 18080:18080
    entrypoint: /bin/sh
    command: >
      -c "
      echo \"
      spark.sql.catalog.demo.io-impl org.apache.iceberg.aws.s3.S3FileIO \n
      spark.sql.catalog.demo.warehouse s3://warehouse \n
      spark.sql.catalog.demo.s3.endpoint http://minio:9000 \n
      \" >> /opt/spark/conf/spark-defaults.conf && ./entrypoint.sh notebook
      "

At this point, the full docker compose file should look like this:

version: "3"

services:
  spark-iceberg:
    image: tabulario/spark-iceberg
    depends_on:
      - postgres
    container_name: spark-iceberg
    environment:
      - SPARK_HOME=/opt/spark
      - PYSPARK_PYTHON=/usr/bin/python3.9
      - PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/opt/spark/bin:/opt/spark/sbin
      - AWS_ACCESS_KEY_ID=admin
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    volumes:
      - ./warehouse:/home/iceberg/warehouse
      - ./notebooks:/home/iceberg/notebooks/notebooks
    ports:
      - 8888:8888
      - 8080:8080
      - 18080:18080
    entrypoint: /bin/sh
    command: >
      -c "
      echo \"
      spark.sql.catalog.demo.io-impl org.apache.iceberg.aws.s3.S3FileIO \n
      spark.sql.catalog.demo.warehouse s3://warehouse \n
      spark.sql.catalog.demo.s3.endpoint http://minio:9000 \n
      \" >> /opt/spark/conf/spark-defaults.conf && ./entrypoint.sh notebook
      "
  postgres:
    image: postgres:13.4-bullseye
    container_name: postgres
    environment:
      - POSTGRES_USER=admin
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=demo_catalog
    volumes:
      - ./postgres/data:/var/lib/postgresql/data
  minio:
    image: minio/minio
    container_name: minio
    environment:
      - MINIO_ROOT_USER=admin
      - MINIO_ROOT_PASSWORD=password
    ports:
      - 9001:9001
      - 9000:9000
    command: ["server", "/data", "--console-address", ":9001"]
  mc:
    depends_on:
      - minio
    image: minio/mc
    container_name: mc
    environment:
      - AWS_ACCESS_KEY_ID=demo
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    entrypoint: >
      /bin/sh -c "
      until (/usr/bin/mc config host add minio http://minio:9000 admin password) do echo '...waiting...' && sleep 1; done;
      /usr/bin/mc rm -r --force minio/warehouse;
      /usr/bin/mc mb minio/warehouse;
      /usr/bin/mc policy set public minio/warehouse;
      exit 0;
      "

Start it Up!

Finally, we can fire up the containers!

docker-compose up

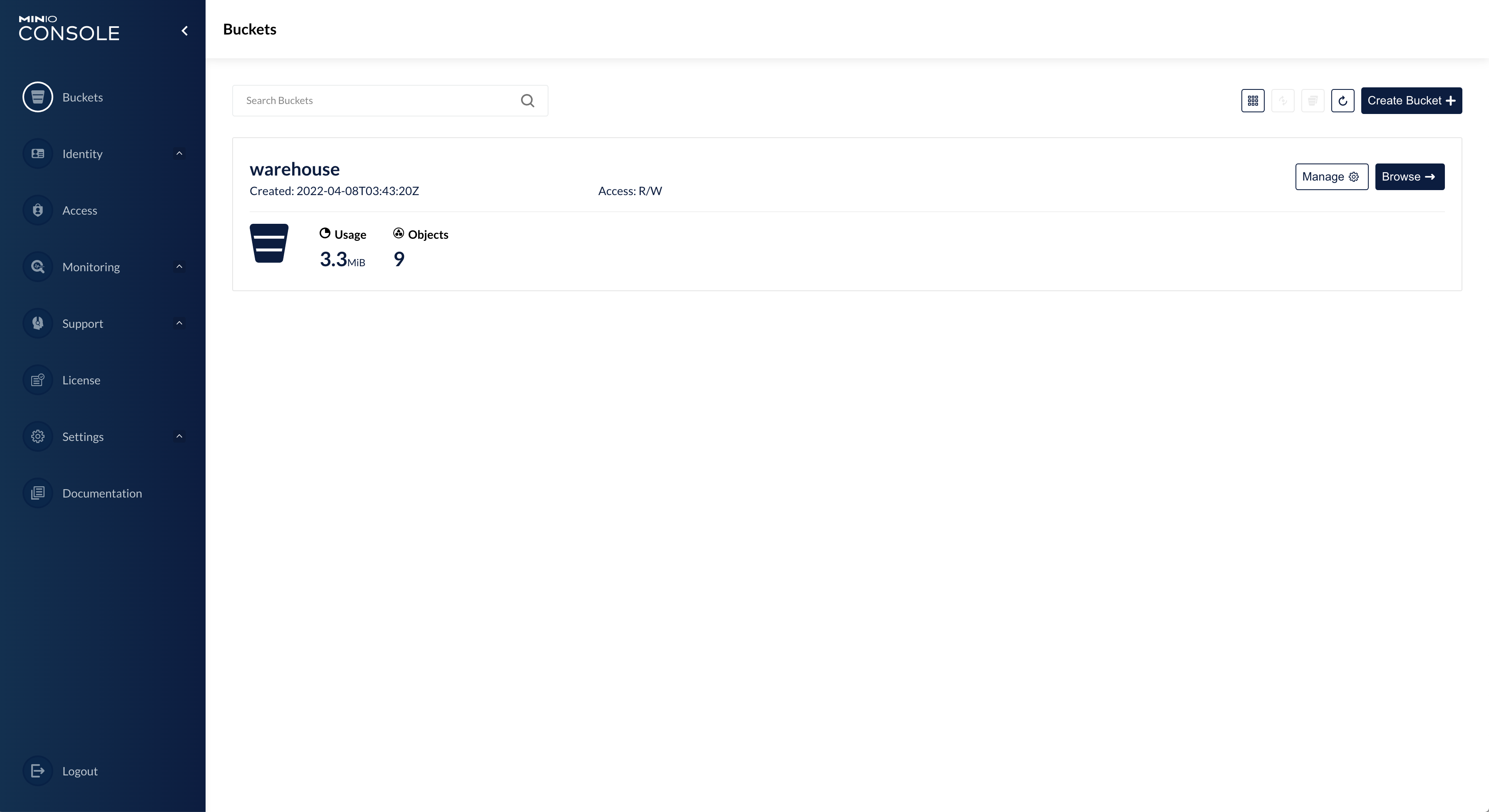

You can find the MinIO UI at http://localhost:9001, where you can log in with the root username and password set earlier (“admin” and “password”) and should see the ‘warehouse’ bucket. Now you can launch a Spark shell or the notebook server, run any of the example notebooks, and watch the data and metadata appear in the MinIO bucket!
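If you would rather check the bucket from a script than from the UI, the sketch below lists object keys over plain HTTP with the Python standard library. It rests on two assumptions that are worth verifying in your own setup: that the public policy set by the mc container allows anonymous listing of the bucket, and that MinIO answers a GET on http://localhost:9000/warehouse/ with the usual S3 ListBucketResult XML. The function names are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip the '{namespace}' prefix that ElementTree puts on element tags."""
    return tag.rsplit("}", 1)[-1]


def object_keys(list_bucket_xml):
    """Extract the object keys from an S3-style ListBucketResult document."""
    root = ET.fromstring(list_bucket_xml)
    keys = []
    for elem in root.iter():
        if local_name(elem.tag) != "Contents":
            continue
        for child in elem:
            if local_name(child.tag) == "Key":
                keys.append(child.text)
    return keys


def list_warehouse(base_url="http://localhost:9000", bucket="warehouse"):
    """Fetch one page of the bucket listing anonymously and return its keys."""
    with urllib.request.urlopen(f"{base_url}/{bucket}/", timeout=5) as resp:
        return object_keys(resp.read())
```

After running one of the example notebooks, list_warehouse() should return the keys of the data and metadata files Iceberg wrote. A single request only returns the first page of results, so a large bucket would need pagination, which this sketch leaves out.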